How to Move Fast and Not Break Things

12/07/2022

Written by Gignesh Patel

As many people reading this will know, there’s an old adage in the world of software development that sends shivers down the spines of people like me — a Senior Software QA Engineer at SilverRail.

SilverRail is a global technology business that helps rail operators and travel agencies transform how they serve customers and run their businesses. After joining the company as a day-one engineer back in 2009, I’ve become accustomed to a certain way of working which fills me with confidence and a strong sense of autonomy — two facets of working life that even some of the world’s most successful companies fail to instil.

As the title of this article suggests, I’m of course talking about the infamous mantra to ‘move fast and break things’. While it’s perhaps a little absurd to critique the words of some of the most successful minds in tech, I don’t think I’m alone in thinking that applying this same mindset in many technology businesses would be a recipe for disaster.

Building software solutions that serve the public comes with an important responsibility to deliver reliable, resilient and consistent user experiences that people trust. While the mantra proved effective for a handful of tech companies that prioritised speed over quality to supercharge growth and dominate rapidly evolving markets, the same mindset has exposed the tech industry to its fair share of controversy in recent years.

By contrast, my thirteen-year tenure at SilverRail paints a very different picture: one of quality-backed growth. As a day-one engineer, I’ve watched the company grow from a small team of ambitious minds into a global team with R&D centres in London, Stockholm, Brisbane and Boston. Throughout that growth, the company’s commitment to building sophisticated testing environments has remained the beating heart of everything we do.

Despite the challenges of entering new markets and highly competitive industries, SilverRail’s commitment to testing remains a linchpin of the company’s success and a focus that has shaped my SilverRail journey up to this point.

So, how does SilverRail manage to move fast and not break things? Join me as I dive into the evolution of our testing environments and explore how our quality-orientated approach provides engineers with the confidence they need to build and ship groundbreaking products that are changing the way people move.

The Evolution of QA Testing at SilverRail

The first thing to note is that SilverRail’s advanced testing environments did not magically rise from thin air. As is the case with many companies, the early days required lots of manual processes that led to frustrating delays, the occasional late night, and plenty of head-scratching.

The evolution of SilverRail’s testing environments, however, is a credit to the company’s willingness to listen to its engineers and give them the space and resources to build innovative solutions. Because SilverRail’s ecommerce platform, SilverCore, is designed to simplify train ticket retailing by making it easy for any website to sell rail, it connects with most major rail carrier systems. As a result, one of the biggest QA challenges we faced was our dependency on third parties.

If you’ve ever worked with a product that relies on third-party data, you’ll understand where I’m coming from. When a third party mediates the flow of information between the thing you’re trying to test and the inputs/responses that the test demands, engineers are often left feeling frustrated, confused, and overworked. It can result in significant delays that are typically very difficult to predict or control.

When we first began testing at SilverRail almost thirteen years ago, running a test on a new piece of code required several QAs and could take anywhere between twelve and forty-eight hours. Why? Because the chances of finding a window in which every third party involved in the test returned the right data at the right time were slim.

Third-party constraints took many forms, from a rail carrier in a different time zone that doesn’t process requests outside its working hours to a route that has sold out of tickets. Removing that dependence has helped SilverRail condense its testing process from forty hours to just forty seconds. To date, we’ve developed over 2,600 automated scenario tests that can scrutinise the integrity of our code without engineers worrying about delays or technical issues caused by third parties.

Enhancing ReadyAPI to Generate Complex User Behaviours

SilverCore is designed to significantly reduce the complexities associated with selling train tickets by providing a fully-integrated service that combines shopping, booking, and purchasing tickets across multiple carriers in a single API.

As you can imagine, testing SilverCore in the early days was like opening Pandora’s box. X didn’t speak to Y, Y didn’t speak to X and Z wouldn’t speak to anything at all.

This complexity meant we were unable to find an existing tool that could handle the diverse range of user behaviours we wanted to simulate. As a result, we decided to look internally by building a homegrown testing service that captures and simulates third-party communications.

This in-house tool was built in conjunction with ReadyAPI to help us generate the tests, capture responses, and, critically, bypass third parties when we rerun these tests. ReadyAPI accelerates the functional, security, and load testing of RESTful, SOAP, and GraphQL web services inside a CI/CD pipeline. For us, this meant we could simulate the two-way flow of information between SilverCore and third parties without actually engaging with them.

However, with the stock ReadyAPI package we had to hand-craft every request we wanted to simulate. For every new request, we had to specify how many people were on the booking, what route they were taking, what kind of tickets they wanted, and how much each ticket cost: an extremely time-consuming process that we wanted to automate.

After some head-scratching from the brilliant team of Backend QA Engineers at SilverRail, we invented our own testing-ws service that bolts onto ReadyAPI.

Nowadays, if we want to create a shop request, we simply fill in a bunch of properties: e.g. “I want to go from Boston to New York, I have two adults, and I want to travel seven days from now”, and then we just run a script. Our testing-ws service then takes those properties and generates a shopping request.
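The article doesn’t describe testing-ws’s interface or SilverCore’s request schema, so the following is only a minimal sketch of the idea under stated assumptions: a handful of properties is expanded into a complete shop request. `ShopProperties`, `build_shop_request` and every XML element name are hypothetical, and the "today" date is passed in explicitly so the same properties always produce the same request.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ShopProperties:
    """Hypothetical property set describing one shopping scenario."""
    origin: str
    destination: str
    adults: int
    days_ahead: int


def build_shop_request(props: ShopProperties, today: date) -> str:
    """Expand a few properties into a full (hypothetical) shop request document."""
    # Resolve the relative travel date ("seven days from now") to a concrete date.
    travel_date = today + timedelta(days=props.days_ahead)
    root = ET.Element("ShopRequest")
    ET.SubElement(root, "Origin").text = props.origin
    ET.SubElement(root, "Destination").text = props.destination
    ET.SubElement(root, "TravelDate").text = travel_date.isoformat()
    passengers = ET.SubElement(root, "Passengers")
    # One passenger element per adult, so nobody has to hand-write them.
    for i in range(props.adults):
        ET.SubElement(passengers, "Passenger", {"id": f"P{i + 1}", "type": "ADULT"})
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    props = ShopProperties("Boston", "New York", adults=2, days_ahead=7)
    print(build_shop_request(props, today=date.today()))
```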

Controlling this process means we can repeat the same test at different times on different days and expect the same result. While live data introduces an unavoidable level of uncertainty and inconsistency (e.g. the number of tickets available on a given route varies day by day, and timetables can differ throughout the week), simulating this workflow provides control and repeatability: the foundations of all good experiments.

I’ll speak more about the role of this repeatability later.

Diversified Tests to Mimic Real-world Scenarios

The world is messy. While it’s relatively easy to build and test code in isolated environments, the reality of putting technology in the hands of customers can reveal a host of unforeseen issues and challenges that require sophisticated testing protocols.

So how does SilverRail use testing to accommodate the messy world we live in?

As mentioned above, our customised version of ReadyAPI provides us with a highly configurable solution. Removing the need for hand-crafted shopping requests means we can test a diverse set of user scenarios. While our previous tests used a handful of standardised booking requests (e.g. one adult travelling from A to B), our extended ReadyAPI setup lets us test thousands of different booking combinations and uncover nuanced issues that would previously have gone undetected.
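To illustrate how a few properties multiply into many scenarios, here is a sketch that takes the Cartesian product of some scenario dimensions. The routes, passenger counts, railcard flag and dates are invented for illustration; they are not SilverRail’s real test matrix.

```python
from itertools import product
from typing import Dict, Iterator, List, Tuple

# Hypothetical scenario dimensions; the real SilverRail property sets are not published.
ROUTES: List[Tuple[str, str]] = [("London", "Edinburgh"), ("Boston", "New York")]
ADULT_COUNTS = [1, 2, 4]
RAILCARD = [False, True]
DAYS_AHEAD = [1, 7, 30]


def generate_scenarios() -> Iterator[Dict[str, object]]:
    """Yield one property set per combination of the dimensions above."""
    for (origin, destination), adults, railcard, days in product(
        ROUTES, ADULT_COUNTS, RAILCARD, DAYS_AHEAD
    ):
        yield {
            "origin": origin,
            "destination": destination,
            "adults": adults,
            "railcard": railcard,
            "days_ahead": days,
        }


if __name__ == "__main__":
    scenarios = list(generate_scenarios())
    # 2 routes x 3 passenger counts x 2 railcard options x 3 travel dates
    print(len(scenarios), "scenarios")
```

Because the product grows multiplicatively with every new dimension, a real suite might sample combinations (for example with pairwise selection) rather than run all of them; the article doesn’t say which approach SilverRail takes.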

Let’s take a look at how these tests work on a practical level…

How Testing Works for SilverCore

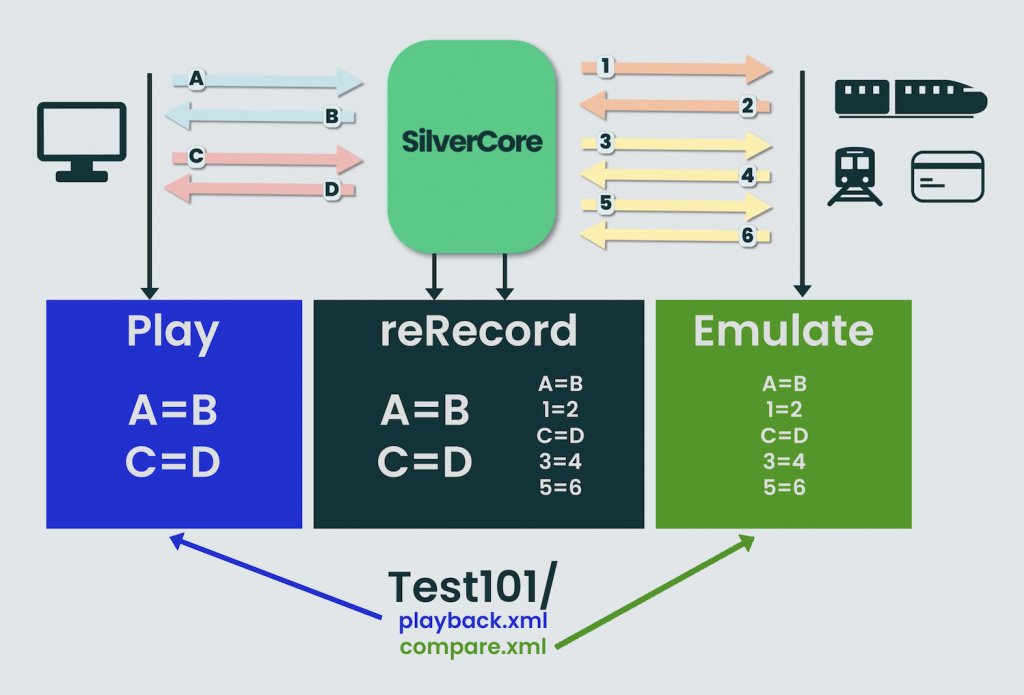

If you watch the video, you’ll see that there are three key components involved in the ‘record’ phase of SilverCore’s testing workflow:

- The ReadyAPI user interface that we use to send requests to, and receive responses from, SilverCore

- Third parties that receive a request from SilverCore and return a response

- Documentation to capture what happened during the test

When we generate a request using ReadyAPI (Request A from the video), SilverCore triggers Request 1, which is sent to a third party. The third party then processes this request and returns Response 2 to SilverCore. SilverCore then processes this response and sends Response B back to ReadyAPI.

At its most basic level, this process serves as a smoke test to detect any inconsistencies between the requests and responses. For example, if we want to simulate a scenario where a customer is searching for one adult rail ticket from London to Edinburgh, we send this request to SilverCore, SilverCore sends a request to the rail carrier that holds the ticketing information, the rail carrier returns a response to SilverCore, and SilverCore returns a response to ReadyAPI. If everything runs smoothly, the responses will match the requests at both hops: between ReadyAPI and SilverCore, and between SilverCore and the rail carrier.
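A minimal sketch of the ‘record’ phase, assuming the system under test reaches third parties through a single injectable transport function. `Recording`, `record` and the toy system and carrier are hypothetical stand-ins, not SilverRail’s in-house tooling: the wrapper captures each SilverCore-to-third-party exchange (1/2 in the diagram) while the outer call captures the ReadyAPI-to-SilverCore pair (A/B). The toy system sends one carrier request per fare type, so a booking for an adult plus an adult with a railcard produces two outbound exchanges.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Exchange = Tuple[str, str]  # (request, response)
Transport = Callable[[str], str]


@dataclass
class Recording:
    # ReadyAPI <-> SilverCore pairs (A/B in the diagram).
    inbound: List[Exchange] = field(default_factory=list)
    # SilverCore <-> third-party pairs (1/2 in the diagram), in call order.
    outbound: List[Exchange] = field(default_factory=list)


def recording_transport(live_call: Transport, recording: Recording) -> Transport:
    """Wrap the live third-party call so every exchange is captured."""
    def call(request: str) -> str:
        response = live_call(request)
        recording.outbound.append((request, response))
        return response
    return call


def record(system_under_test: Callable[[str, Transport], str],
           request: str, live_third_party: Transport) -> Recording:
    """Run one scenario against live third parties and capture both hops."""
    recording = Recording()
    transport = recording_transport(live_third_party, recording)
    response = system_under_test(request, transport)
    recording.inbound.append((request, response))
    return recording


# Toy stand-ins: the "system" sends one third-party request per fare type.
def toy_silvercore(request: str, transport: Transport) -> str:
    return ";".join(transport(f"fare?{fare}") for fare in request.split(","))


def toy_carrier(request: str) -> str:
    return request.replace("fare?", "price:")


if __name__ == "__main__":
    rec = record(toy_silvercore, "adult,adult-railcard", toy_carrier)
    print(rec.inbound)
    print(rec.outbound)
```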

In reference to the video, the two .xml files show how this comparison works in practice. After we run a test, we record detailed documentation of what happened using two files: a playback file (playback.xml) and a compare file (compare.xml).

The compare file takes all of the information and ‘cleans’ the dataset by filtering out any variable fields that aren’t repeatable between tests. The playback file, however, remains in its raw state, documenting every detail of the test (more on the significance of this later). For the ‘record’ phase, we use the compare file to check the outputs of the tests against the inputs documented by ReadyAPI. If we spot any inconsistencies (e.g. A≠B, or A=B but 1≠2), we know something has gone wrong and can trigger diagnostic scripts to pinpoint the error.
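The cleaning step for the compare file might look something like this sketch, which assumes XML documents and a hypothetical list of volatile element names (`Timestamp`, `TransactionId`, `SessionId`); the article doesn’t say which fields SilverRail actually filters. Two runs that differ only in those fields compare as equal once cleaned.

```python
import xml.etree.ElementTree as ET

# Hypothetical fields that change on every run; the real filtering rules are not published.
VOLATILE_TAGS = {"Timestamp", "TransactionId", "SessionId"}


def clean_for_compare(xml_text: str) -> str:
    """Return the document with volatile elements removed, ready for comparison."""
    root = ET.fromstring(xml_text)
    # Snapshot the tree first: mutating elements while iterating the tree is unsafe.
    for parent in list(root.iter()):
        for child in list(parent):
            if child.tag in VOLATILE_TAGS:
                parent.remove(child)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    run_1 = ("<ShopResponse><TransactionId>abc</TransactionId>"
             "<Fare>42.50</Fare><Timestamp>2022-07-12T10:00:00</Timestamp></ShopResponse>")
    run_2 = ("<ShopResponse><TransactionId>xyz</TransactionId>"
             "<Fare>42.50</Fare><Timestamp>2022-07-13T09:15:00</Timestamp></ShopResponse>")
    print(clean_for_compare(run_1) == clean_for_compare(run_2))
```

Running it prints `True`, because both documents reduce to the same `<Fare>` element; a change in the fare itself would still be caught.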

You’ll note that the video shows a Request 3 and a Request 5 that correspond with a Response 4 and a Response 6, respectively. This signifies that Request C (generated by ReadyAPI) has triggered SilverCore to send two separate requests to the third party. This could be a customer who’s looking for two adult tickets from point A to point B but perhaps one of the tickets is using a railcard. When the documentation of this test is compared against the original request sent by ReadyAPI, a successful result will show that C=D, 3=4 and 5=6.

Regression Tests: Play, Record, Replay

The beating heart of SilverRail’s testing capabilities is our ability to recycle and repurpose the information we receive from the ‘record’ phase using regression tests.

Instead of simply running a test in isolation and creating bespoke tests every time we add a new feature or deploy tweaked code to the Git pipeline, our implementation of ReadyAPI means we can run a test, record what happens, and then replay this test at a different point in time to compare the two outcomes.

Importantly, we can repeat this process automatically at periodic intervals to continuously assess the integrity of our ever-evolving tech suite, helping us to grow at speed without being blindsided by technical vulnerabilities or third-party dependency errors.

How SilverCore’s Regression Tests Work

- As previously mentioned, one of the two files we capture from the initial ‘record’ phase is a raw playback file which we use in our regression tests as stimuli to replay the same scenario against the exact same testing criteria. This replay is processed by a test manager that we built in-house called TestExec.groovy (see Figure 1). TestExec.groovy serves the same purpose as ReadyAPI did in the initial test but it’s tailored specifically to work in our ‘replay’ environment.

- We then take this playback file, load it up in our play space, take the compare file and load it up in our emulation space. From here, we begin replaying all of the requests from the play space. While this happens, the system is also re-recording information from the replay test — if any changes happen, the comparison between a request and response will show a discrepancy and the test will fail.

- We then save this re-recording as a timestamped directory. This new time-stamped directory (Test101_2009-11-30-12-32-00-358/) is compared to the existing Test101/ record. As long as these two recordings match (i.e. for all of the inputs we send to SilverCore, we receive all the same outputs and for all of the inputs we send to third parties, they’re generating the same third-party outputs), we can be confident that any changes to the Git pipeline do not conflict with the parameters we have previously tested.
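The replay steps above can be sketched as follows, again under assumptions: recorded third-party exchanges are served by a stub in place of the live carrier, recorded inbound requests are replayed against the system, and any difference on either hop is reported as a failure. `PlaybackStub`, `replay`, the toy system and the in-memory comparison are hypothetical simplifications of TestExec.groovy and the directory comparison described above; `run_dir_name` only reproduces the naming pattern of the `Test101_2009-11-30-12-32-00-358/` example.

```python
from datetime import datetime
from typing import Callable, Dict, List, Tuple

Exchange = Tuple[str, str]  # (request, response)
Transport = Callable[[str], str]


def run_dir_name(test_name: str, when: datetime) -> str:
    """Name a re-recording like 'Test101_2009-11-30-12-32-00-358' (millisecond precision)."""
    return f"{test_name}_{when:%Y-%m-%d-%H-%M-%S}-{when.microsecond // 1000:03d}"


class PlaybackStub:
    """Answers third-party requests from a recording instead of the live carrier."""

    def __init__(self, recorded: List[Exchange]) -> None:
        self._answers: Dict[str, List[str]] = {}
        for request, response in recorded:
            self._answers.setdefault(request, []).append(response)
        self.seen: List[Exchange] = []  # the re-recording of this replay

    def __call__(self, request: str) -> str:
        queue = self._answers.get(request)
        if not queue:
            raise LookupError(f"no recorded response for {request!r}")
        response = queue.pop(0)
        self.seen.append((request, response))
        return response


def replay(system_under_test: Callable[[str, Transport], str],
           gold_inbound: List[Exchange], gold_outbound: List[Exchange]) -> List[str]:
    """Replay recorded stimuli and return the discrepancies (an empty list means pass)."""
    stub = PlaybackStub(gold_outbound)
    failures: List[str] = []
    for request, expected in gold_inbound:
        try:
            actual = system_under_test(request, stub)
        except LookupError as exc:
            failures.append(f"unexpected third-party call: {exc}")
            continue
        if actual != expected:
            failures.append(f"{request!r}: expected {expected!r}, got {actual!r}")
    # Third-party traffic must match the recording too, in the same order.
    if stub.seen != gold_outbound:
        failures.append("third-party requests differ from the recording")
    return failures


# Toy system: one third-party request per fare type in the booking.
def toy_silvercore(request: str, transport: Transport) -> str:
    return ";".join(transport(f"fare?{fare}") for fare in request.split(","))


if __name__ == "__main__":
    gold_out = [("fare?adult", "price:30"), ("fare?adult-railcard", "price:20")]
    gold_in = [("adult,adult-railcard", "price:30;price:20")]
    print(run_dir_name("Test101", datetime.now()), replay(toy_silvercore, gold_in, gold_out))
```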

Figure 1: The Replay Phase of Testing SilverCore

It’s important to note that the same sets of tests that we derive from this regression process are also integrated into our Git pipelines. When a pipeline is built, the unit tests are executed, and then deployment can happen. A subset of tests from our bank of over 2,600 scenario tests then runs as part of the pipeline before our developers can merge their code into master. The result is a chain of regression tests executed by the developer, by the Git pipeline, and by a nightly run that automatically exercises all of the tests in a given subset.
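As a rough illustration of running one subset before merge and a broader set nightly, the sketch below selects scenarios by tag and turns the outcome into a process exit code that a CI job could act on. The `Scenario` type, the `merge` and `nightly` tags and the exit-code convention are assumptions; the article doesn’t describe how the subsets are defined.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass(frozen=True)
class Scenario:
    name: str
    tags: FrozenSet[str]       # hypothetical labels, e.g. "merge" or "nightly"
    run: Callable[[], bool]    # True when the replay matches the recording


def select(bank: List[Scenario], tag: str) -> List[Scenario]:
    """Pick the subset of the scenario bank that carries the given tag."""
    return [s for s in bank if tag in s.tags]


def gate(scenarios: List[Scenario]) -> int:
    """Run the scenarios and return an exit code: 0 lets the merge proceed."""
    failed = [s.name for s in scenarios if not s.run()]
    for name in failed:
        print(f"FAILED: {name}")
    return 1 if failed else 0


if __name__ == "__main__":
    bank = [
        Scenario("Test101", frozenset({"merge", "nightly"}), lambda: True),
        Scenario("Test102", frozenset({"nightly"}), lambda: True),
    ]
    print("merge gate exit code:", gate(select(bank, "merge")))
```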

This triple-walled approach to regression testing means SilverRail’s engineers begin and end each day knowing that their code has been successfully deployed. In the event that an error does occur, our engineers also have visibility of the problem, which means they can find a fast and permanent solution without having to crawl through lines of code or implement fragile ‘quick fixes’.

At a company level, this translates into the efficient deployment of high-quality tech solutions. On a personal level, it means I can close my laptop on a Friday evening confident that I won’t need to open it again until Monday morning. That’s not something many QAs can say.

Collective Responsibility: Detecting Errors as a Team

As I’m sure many other engineers reading this will testify, building software solutions that impact people’s lives carries a certain sense of responsibility that can be challenging at times. The expectation to maintain near-perfect uptime while also feeling the pressure to push for continuous feature updates is a responsibility that many tech companies fail to recognise or support.

At SilverRail, however, we’ve adopted a collaborative approach to QA and testing that helps to spread the load across a wider team, reduce the margin for error, and create a sense of collective responsibility that seeps through to the core of our close-knit team culture.

On a practical level, I meet every week with two Software Architects and another member of the QA team. This regular sync point lets us review the performance of our regression tests, identify inconsistencies, discuss whether those inconsistencies indicate potential vulnerabilities and, if so, trigger a contingency plan to rectify the error. It also lets us identify outdated tests that need to be replaced with the time-stamped directory generated by the regression run.

For example, if we add a new feature and we receive five extra elements in the shopping response, we will update the test by amending the compare file. We have scripts that allow us to take the results from the time-stamped directory of a replay test and commit this into source control — telling the system that going forward, this is our new gold standard. All future tests will be compared to this new file and then a member of the QA team will vet this amendment to make sure it’s a valid change.
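A promotion script of the kind described here might look like this sketch: find the newest time-stamped run, copy it over the gold recording, and commit the result with Git. The directory layout, function names and commit message are hypothetical; only the `git add` and `git commit` commands are standard, and the QA review still happens outside the script.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path


def latest_run(results_root: Path, test_name: str) -> Path:
    """Newest re-recording; zero-padded timestamps sort correctly as strings."""
    runs = sorted(p for p in results_root.glob(f"{test_name}_*") if p.is_dir())
    if not runs:
        raise FileNotFoundError(f"no replay runs found for {test_name}")
    return runs[-1]


def promote_run(run_dir: Path, gold_dir: Path) -> None:
    """Replace the gold recording with the contents of a vetted re-recording."""
    if gold_dir.exists():
        shutil.rmtree(gold_dir)
    shutil.copytree(run_dir, gold_dir)


def commit_gold(gold_dir: Path, message: str) -> None:
    """Stage the new gold standard (including deleted files) and commit it."""
    subprocess.run(["git", "add", "--all", "--", str(gold_dir)], check=True)
    subprocess.run(["git", "commit", "-m", message], check=True)


if __name__ == "__main__":
    # Dry run in a scratch directory; commit_gold is deliberately not called here.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for stamp in ("2022-07-11-09-00-00-000", "2022-07-12-09-00-00-000"):
            run = root / f"Test101_{stamp}"
            run.mkdir()
            (run / "compare.xml").write_text(f"<recorded at='{stamp}'/>")
        promote_run(latest_run(root, "Test101"), root / "Test101")
        print((root / "Test101" / "compare.xml").read_text())
```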

Move Fast & Don’t Break Things With SilverRail

Call me biased, but if my thirteen-year tenure has taught me anything, it’s that working as a Senior Software QA Engineer at SilverRail gives you a level of confidence and support that is hard to find in the tech world.

Having recognised the significance of strong testing environments all those years ago, SilverRail still treats sophisticated testing as a core pillar of its development approach. The company continues to welcome new ideas from engineers and invest in solutions that help us move fast without compromising on quality.

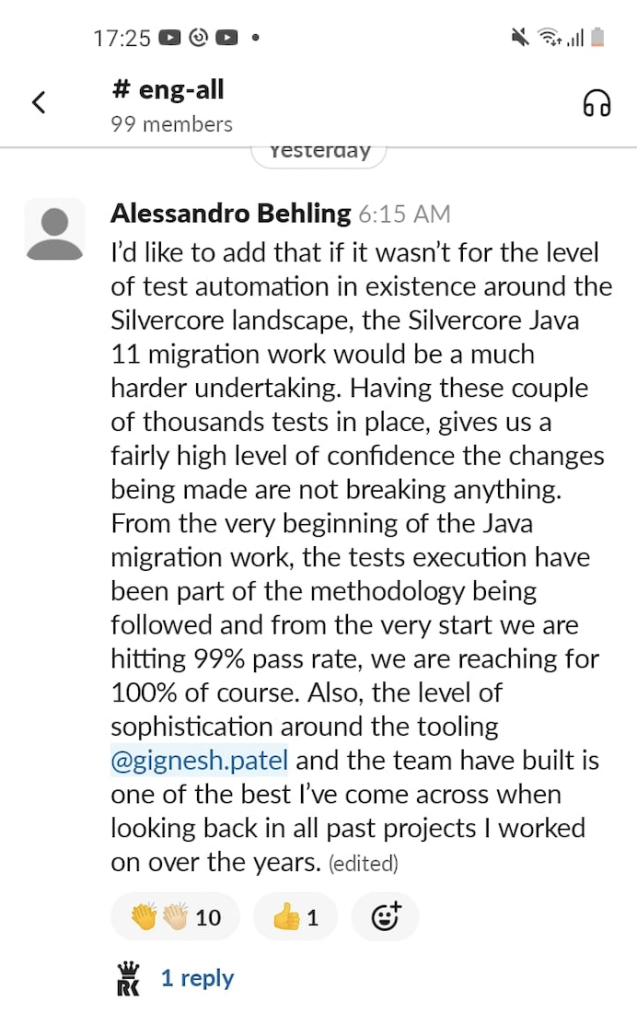

Don’t just take my word for it!

If you fancy some further reading, check out how two Queensland students helped to build a journey planning algorithm that outsmarts Google — it’s a prime example of how SilverRail listens to employees with fresh ideas and takes proactive steps to make them happen.

As we continue on our mission to change the way people move through powerful software solutions, I’m proud to be part of a team that truly believes in its products, values its engineers and continues to deliver quality solutions that make the world a greener place.

Fancy joining us for the ride? Explore our open positions here.